Hello everyone. It’s been some time since I last posted. This blog shares my experience in the IBM TGMC contest for college students.

The contest starts by providing a set of case studies like smart city, green planet etc., which reflect some of IBM’s ideas on making this world a better place to live.

So, there is an initial meetup between the IBM guys and the college HODs, staff and students, with an exposition of IBM tech trends and software such as IBM DB2, IBM WebSphere server, the Rational development suite for apps etc. This serves as an initial session to build a good rapport.

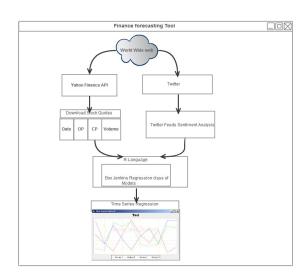

Following this, teams of 3 to 5 register online and choose their app. Mentors can be chosen from the college faculty as well as from IBM. To gauge the students’ interest and skill level, and to close the gap between the existing skill set and the expected one, there are intermediate performance assessments such as submission of use case diagrams, a synopsis, a design document etc.

Working on this project made me aware of IBM parlance such as business logic, database servers vs databases!!, integrated development and deployment frameworks etc.

This was followed by the actual coding and testing of the app, packaging the code as an EAR/WAR and submitting it for review by IBM. This is the basis for short-listing the top 50 teams or so who make it to the successive rounds.

So, I received a certificate of appreciation from IBM for implementing the case study on E-COPS. It was great fun and an absolutely awesome exposure to the practical side of coding apps at an industry level.

Like-minded geeks can find my certificate and docs attached. So, start Apping….

E-COPS

TGMC-cert

Navigating the Seas of Enterprise Data Management

In the ever-evolving landscape of business, data has emerged as a valuable asset that can fuel growth, innovation, and strategic decision-making. The effective handling of vast amounts of data is paramount for organizations to stay competitive and agile. This is where Enterprise Data Management (EDM) steps in, providing a structured approach to harnessing, organizing, and leveraging data across the enterprise.

The Foundation: Data Governance

At the heart of any successful EDM strategy lies robust data governance. It sets the rules, policies, and procedures for managing data throughout its lifecycle. By establishing clear ownership, defining data quality standards, and ensuring compliance with regulations, organizations can build a solid foundation for effective data management.

Centralized Data Repositories

One key aspect of EDM is the creation of centralized data repositories. These repositories serve as secure, organized hubs for storing and accessing data. Implementing a centralized data storage solution not only enhances data accessibility but also facilitates efficient data integration across various departments, reducing silos and promoting collaboration.

Data Quality Assurance

Data quality is non-negotiable in the world of EDM. Poor-quality data can lead to misguided decisions and operational inefficiencies. A comprehensive data quality assurance process involves regular audits, cleansing, and validation to ensure that the data within the enterprise is accurate, consistent, and reliable. This commitment to data quality enhances trust in the information used for decision-making.
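As a rough illustration of the cleansing, validation and audit steps described above, here is a minimal Python sketch. The field names (`customer_id`, `email`), the email pattern and the helper functions are all assumptions made up for this example; real quality rules depend on the organization's own data standards.

```python
import re

# Deliberately simple email pattern, assumed for illustration only.
EMAIL_RE = re.compile(r"^[^@\s]+@[^@\s]+\.[^@\s]+$")


def cleanse(record):
    """Trim whitespace from string fields and lowercase the email."""
    cleaned = {k: v.strip() if isinstance(v, str) else v for k, v in record.items()}
    if isinstance(cleaned.get("email"), str):
        cleaned["email"] = cleaned["email"].lower()
    return cleaned


def validate(record):
    """Return a list of rule violations for one cleansed record."""
    problems = []
    if not record.get("customer_id"):
        problems.append("missing customer_id")
    if not EMAIL_RE.match(record.get("email") or ""):
        problems.append("invalid email")
    return problems


def audit(records):
    """Cleanse and validate every record.

    Returns (clean_records, report), where report maps the index of each
    rejected record to the list of problems found in it.
    """
    clean, report = [], {}
    for index, raw in enumerate(records):
        record = cleanse(raw)
        problems = validate(record)
        if problems:
            report[index] = problems
        else:
            clean.append(record)
    return clean, report
```

Running `audit` on a regular schedule and tracking the size of the report over time is one simple way to turn the "regular audits" idea into a measurable check.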

Master Data Management (MDM)

MDM is a critical component of EDM, focusing on the identification and management of master data—core business entities such as customers, products, and employees. By establishing a single, accurate, and consistent version of master data, organizations can improve operational efficiency, reduce errors, and enhance the overall reliability of their data.
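To make the "single version of master data" idea concrete, the sketch below merges duplicate customer records into one consolidated record each. It is only an assumption-laden example: matching on a normalized email and letting the first non-empty value win are hypothetical rules chosen for brevity, and real MDM tools use far richer matching and survivorship logic.

```python
def match_key(record):
    """Hypothetical matching rule: same normalized email means same customer."""
    return record["email"].strip().lower()


def build_golden_records(records):
    """Merge records that share a match key into one record per customer.

    Survivorship rule (an assumption for this sketch): for each field,
    the first non-empty value seen is kept.
    """
    golden = {}
    for record in records:
        merged = golden.setdefault(match_key(record), {})
        for field, value in record.items():
            if value not in (None, "") and field not in merged:
                merged[field] = value
    return list(golden.values())
```

For example, two entries for the same customer, one missing a phone number and one with a differently cased email, collapse into a single record that carries the name from the first entry and the phone number from the second.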

Data Security and Compliance

As data breaches become more sophisticated and prevalent, ensuring the security of enterprise data is paramount. EDM includes robust security measures to protect sensitive information, incorporating encryption, access controls, and regular security audits. Moreover, compliance with data protection regulations, such as GDPR or HIPAA, is integral to safeguarding the organization against legal repercussions and reputational damage.

Scalability and Flexibility

The business landscape is dynamic, and the data management strategy should be too. Scalability and flexibility are crucial aspects of EDM, allowing organizations to adapt to changing business requirements and technological advancements. Cloud-based solutions, for example, provide the scalability needed to handle growing volumes of data, while also offering the flexibility to integrate with emerging technologies.

Continuous Improvement through Analytics

EDM is not a one-time implementation; it is an ongoing process that thrives on continuous improvement. Analytics play a pivotal role in this evolution, providing insights into data usage, performance, and trends. By leveraging analytics tools, organizations can identify opportunities for optimization, address inefficiencies, and stay ahead of the curve in the data-driven landscape.

Conclusion

Enterprise Data Management is the compass that guides organizations through the complex seas of data. By establishing strong data governance, implementing centralized repositories, ensuring data quality, and embracing scalability, organizations can unlock the true potential of their data. In an era where data is king, a well-crafted EDM strategy is not just a necessity; it is the key to staying competitive and thriving in the digital age.